Delivery methods

The Nimble SERP API supports three delivery methods: real-time, Cloud, and Push/Pull.

For real-time delivery, see our page on performing a real-time search request. To use Cloud or Push/Pull delivery, use an asynchronous request instead. Asynchronous requests also have the added benefit of running in the background, so you can continue working without waiting for the job to complete.

To send an asynchronous request, use the /async/serp endpoint with the following syntax:

Nimble APIs require that a base64-encoded credential string be sent with every request to authenticate your account. For detailed examples, see Web API Authentication.

curl -X POST 'https://api.webit.live/api/v1/async/serp' \
  --header 'Authorization: Basic <credential string>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "search_engine": "google_search",
    "country": "US",
    "query": "Sample search phrase",
    "storage_type": "s3",
    "storage_url": "s3://sample-bucket",
    "callback_url": "https://my.callback/path"
  }'

import requests

url = 'https://api.webit.live/api/v1/async/serp'

headers = {
    'Authorization': 'Basic <credential string>',
    'Content-Type': 'application/json'
}

# Request body: search parameters plus storage and callback settings
data = {
    "search_engine": "google_search",
    "country": "FR",
    "locale": "fr",
    "query": "Sample search phrase",
    "storage_type": "s3",
    "storage_url": "s3://sample-bucket",
    "callback_url": "https://my.callback/path"
}

# Send the asynchronous request
response = requests.post(url, headers=headers, json=data)

print(response.status_code)
print(response.json())
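The credential string placeholder in the examples above can be produced with a few lines of Python. This is a minimal sketch that assumes the credential string is the standard HTTP Basic form, base64 of `username:password`; the helper name and the placeholder credentials are hypothetical, so check Web API Authentication for the exact format your account uses.

```python
import base64


def build_credential_string(username: str, password: str) -> str:
    """Return the base64 form of 'username:password' for a Basic auth header."""
    raw = f"{username}:{password}".encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


# Hypothetical placeholder credentials, for illustration only.
credential = build_credential_string("account_name", "account_password")
headers = {
    "Authorization": f"Basic {credential}",
    "Content-Type": "application/json",
}
```

The resulting `headers` dictionary can be passed to `requests.post` in place of the hard-coded one.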

Request Options

Asynchronous requests share the same parameters as real-time requests, but also include storage and callback parameters.

| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| query | Required | String | The term or phrase to search for. |
| search_engine | Required | Enum: google_search, bing_search, yandex_search | The search engine from which to collect results. |
| tab | Optional (default = null) | Enum: news | When using google_search, setting tab to news returns Google News results instead of standard search results. |
| num_results | Optional | Integer | Sets the number of returned search results. |
| domain | Optional | String | Searches through a custom top-level domain of Google, e.g. "co.uk". |
| country | Optional (default = all) | String | Country used to access the target URL. Use ISO Alpha-2 country codes, e.g. US, DE, GB. |
| state | Optional | String | For targeting US states (does not include regions or territories in other countries). Two-letter state code, e.g. NY, IL. |
| city | Optional | String | For targeting large cities and metro areas around the globe. When targeting major US cities, you must include state as well. See the list of available cities. |
| locale | Optional (default = en) | String | LCID standard locale used for the URL request. Alternatively, use auto to select the locale automatically based on country targeting. |
| location | Optional | String | Searches Google through a custom geolocation, regardless of country or proxy location, e.g. "London,Ohio,United States". See Getting local data for more information. |
| parse | Optional (default = true) | Boolean | Instructs Nimble whether to structure the results into JSON format or return the raw HTML. |
| ads_optimization | Optional (default = false) | Boolean | Increases the number of paid (sponsored) ads in the results by running the requests in 'incognito' mode. |
| storage_type | Required for Cloud delivery | Enum: s3, gs | Use s3 for Amazon S3 and gs for Google Cloud Storage. |
| storage_url | Required for Cloud delivery | String | Repository URL, e.g. s3://Your.Bucket.Name/your/object/name/prefix/. Output is saved to TASK_ID.json. |
| callback_url | Optional | String | A URL to call back once the data is delivered. Nimble APIs send a POST request to the callback_url with the task details once the task is complete (this “notification” does not include the requested data). |
| storage_compress | Optional (default = false) | Boolean | When set to true, the response saved to the storage_url is compressed using GZIP, which can reduce storage size and improve data transfer efficiency. If not set or set to false, the response is saved in its original uncompressed format. |
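When storage_compress is set to true, the stored result has to be decompressed before it can be parsed. The sketch below shows one way to do that with the Python standard library. It assumes the result was requested with parse = true (so the content is JSON) and that the file's bytes have already been downloaded from your bucket; the helper name and the sample payload are hypothetical.

```python
import gzip
import json


def load_result(raw_bytes: bytes, compressed: bool):
    """Decode a stored result, gunzipping first if storage_compress was true."""
    if compressed:
        raw_bytes = gzip.decompress(raw_bytes)
    return json.loads(raw_bytes.decode("utf-8"))


# Simulate a compressed result file with a made-up payload.
sample = gzip.compress(json.dumps({"status": "example"}).encode("utf-8"))
print(load_result(sample, compressed=True))
```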

Setting GCS/AWS access permissions

GCS Repository Configuration

In order to use Google Cloud Storage as your destination repository, please add Nimble’s system user as a principal to the relevant bucket. To do so, navigate to the “bucket details” page in your GCP console, and click on “Permission” in the submenu.

Next, paste our system user [email protected] into the “New Principals” box, select Storage Object Creator as the role, and click save.

That’s all! At this point, Nimble will be able to upload files to your chosen GCS bucket.

S3 repository configuration

In order to use S3 as your destination repository, please give Nimble’s service user permission to upload files to the relevant S3 bucket. Paste the following JSON into the “Bucket Policy” (found under “Permissions”) in the AWS console.

Follow these steps:

1. Go to the “Permissions” tab on the bucket’s dashboard:

2. Scroll down to “Bucket policy” and press edit:

3. Paste the following bucket policy configuration into your bucket:

Important: Remember to replace “YOUR_BUCKET_NAME” with your actual bucket name.

Here is what the bucket policy should look like:

4. Scroll down and press “Save changes”

S3 Encrypted Buckets

If your S3 bucket is encrypted using an AWS Key Management Service (KMS) key, permissions beyond those outlined above are needed. Specifically, Nimble's service user must be given permission to encrypt and decrypt objects using the KMS key. To do this, follow the steps below:

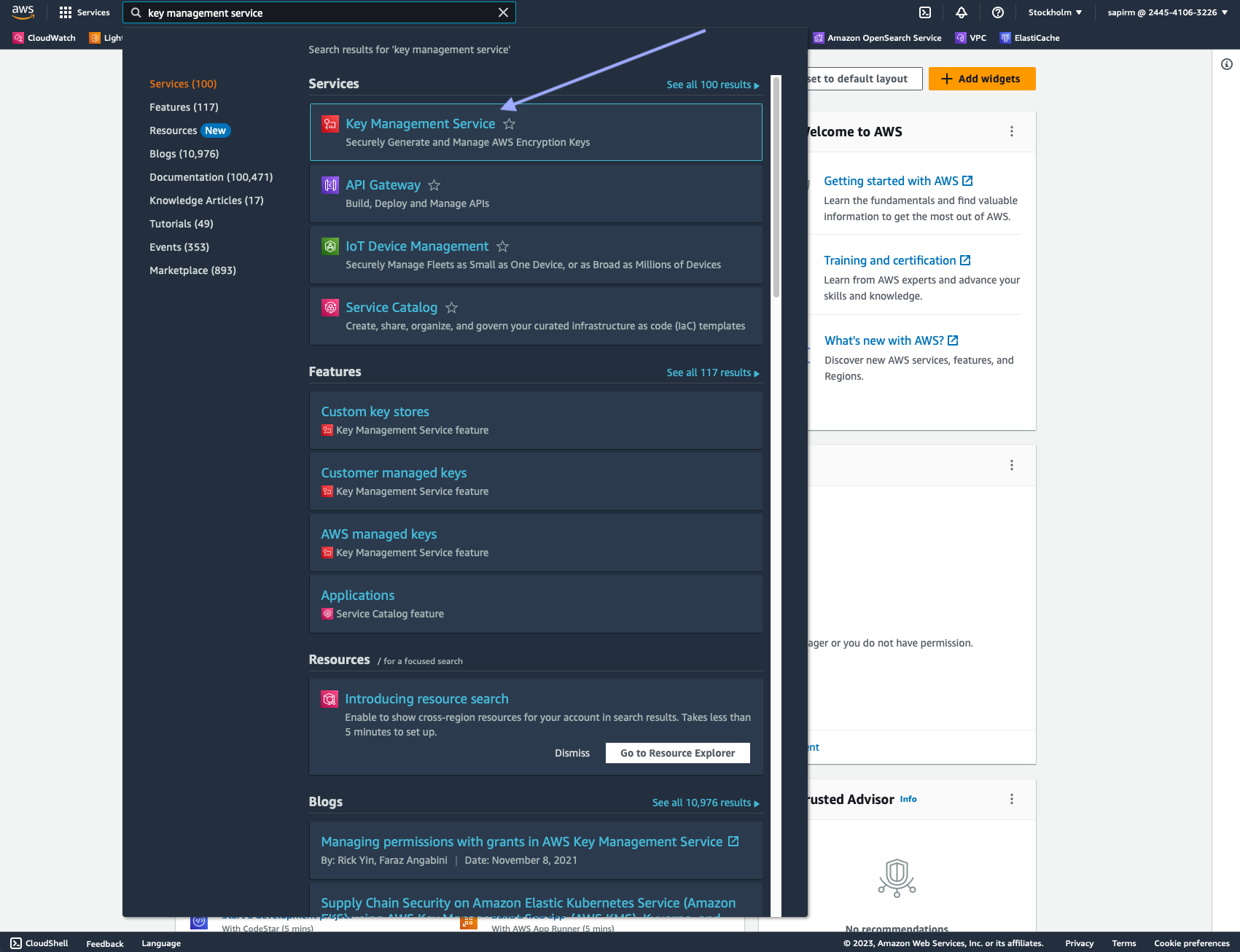

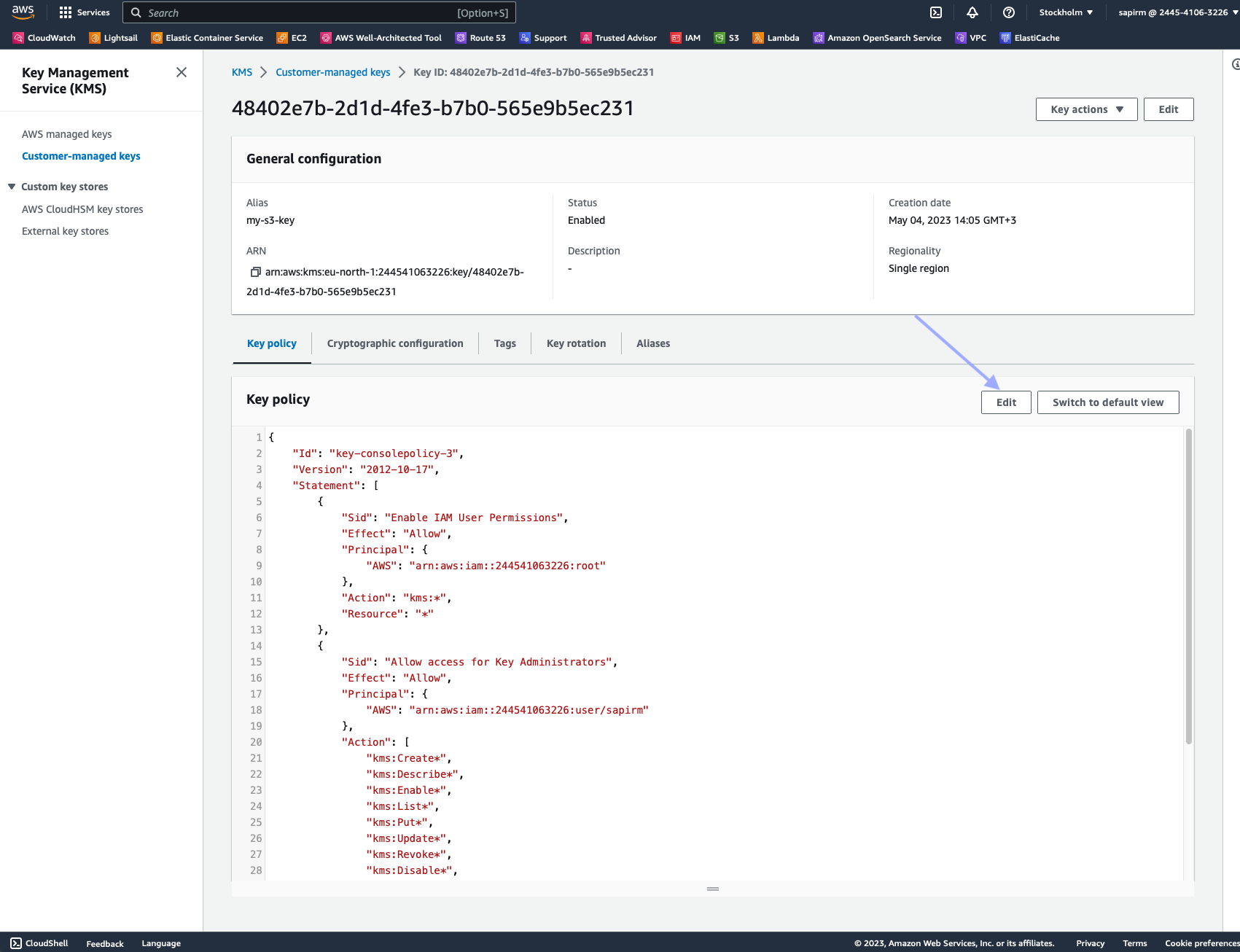

Sign in to the AWS Management Console and open the AWS Key Management Service (KMS) console.

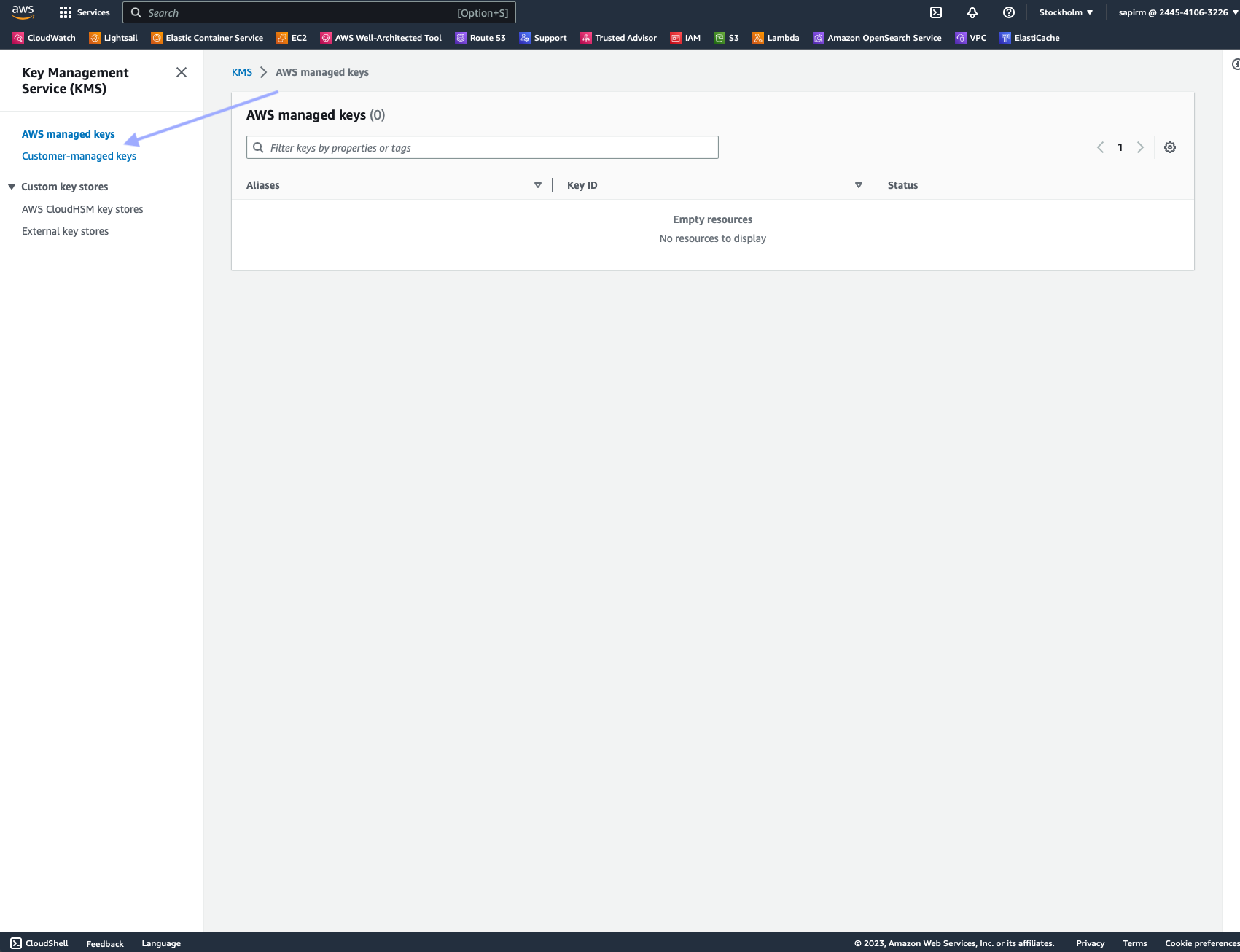

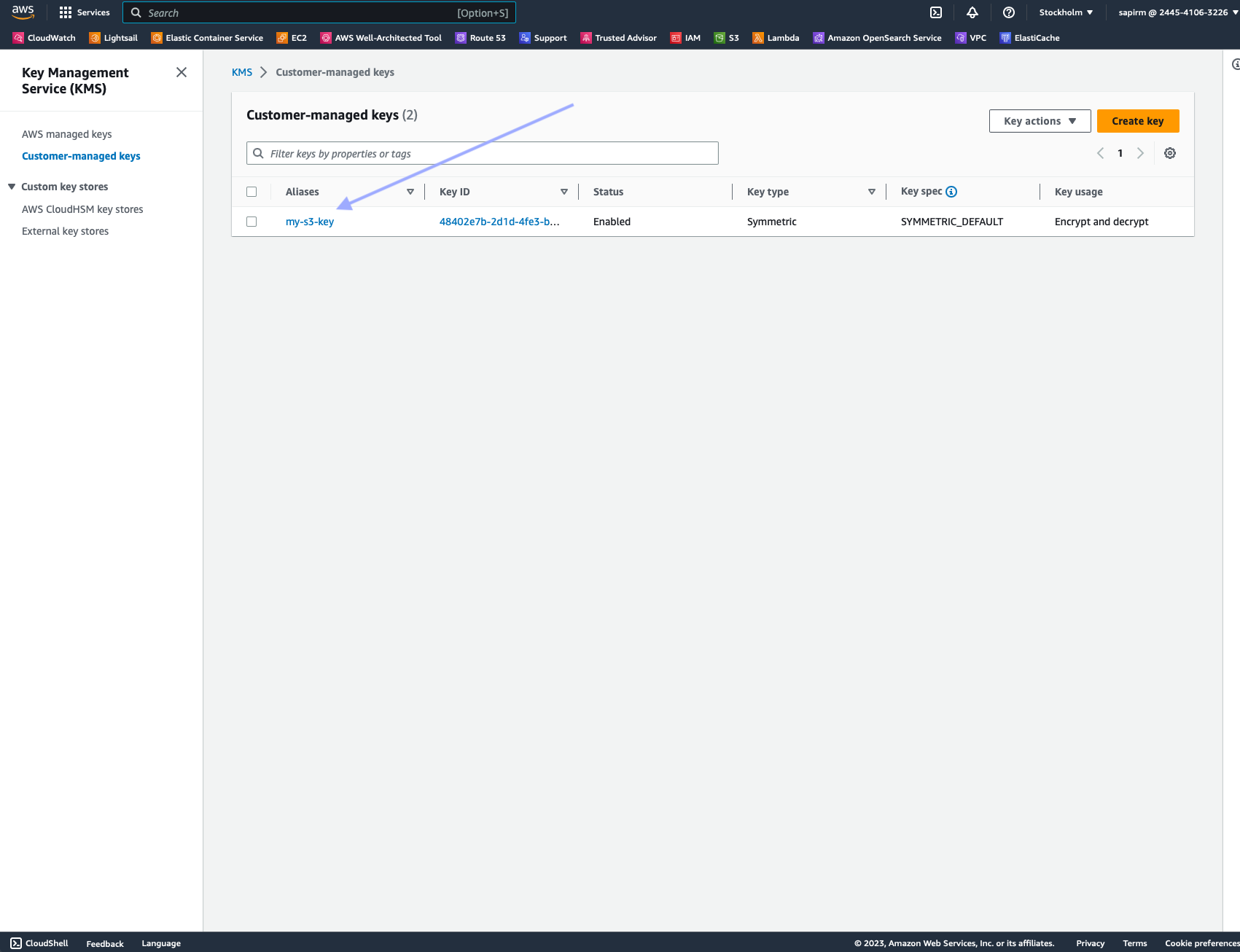

In the navigation pane, choose "Customer managed keys".

Select the KMS key you want to modify.

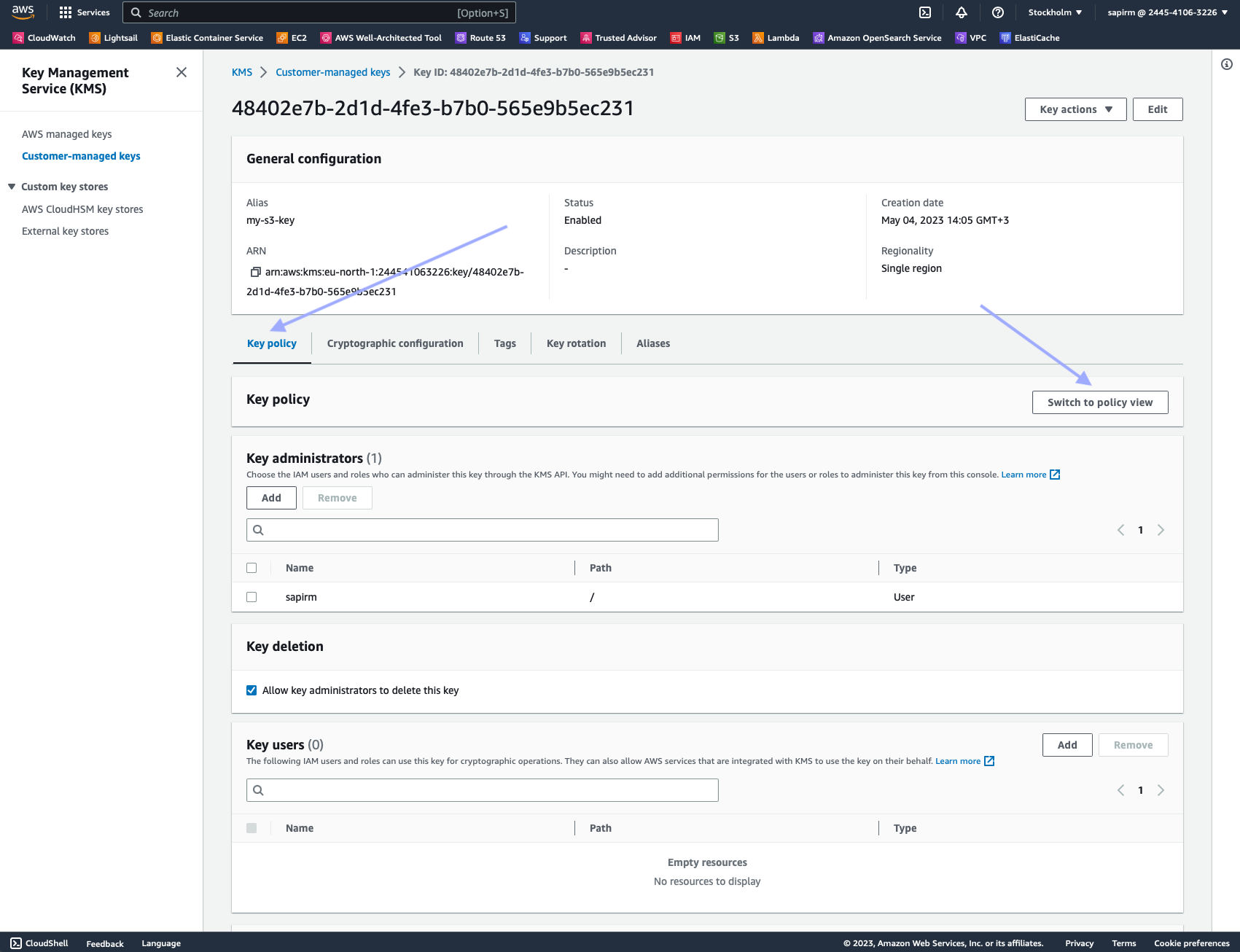

Choose the "Key policy" tab, then "Switch to policy view".

Click "Edit".

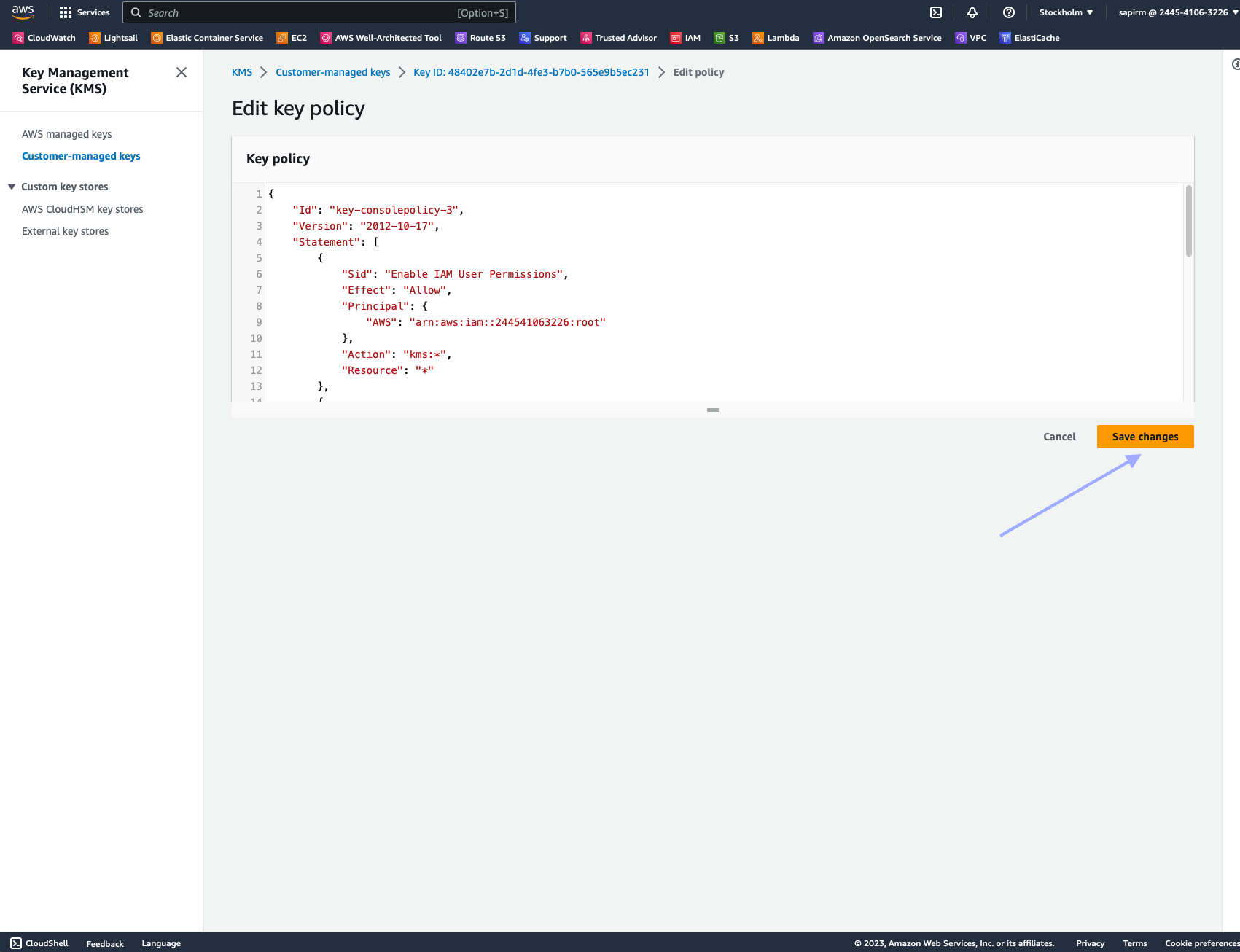

Add the following statement to the existing policy JSON, ensuring it's inside the Statement array:

Click "Save changes" to update the key policy.

That's it! You've now given Nimble APIs permission to encrypt and decrypt objects, enabling access to encrypted buckets.

Please add Nimble's system/service user to your GCS or S3 bucket to ensure that data can be delivered successfully.

Selecting a delivery method

To enable cloud delivery:

Set the storage_type parameter to either s3 or gs

Set the storage_url parameter to the bucket/folder URL of your cloud storage where you'd like the data to be saved.

To enable Push/Pull delivery:

Leave both the storage_type and storage_url fields blank. Nimble will automatically recognize that Push/Pull delivery has been selected.
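The two delivery modes differ only in whether the storage fields are present in the request body. The following sketch builds a body for either mode. The function name is hypothetical, and it assumes that omitting storage_type and storage_url from the JSON is equivalent to leaving them blank.

```python
from typing import Optional


def build_async_payload(
    query: str,
    search_engine: str,
    storage_type: Optional[str] = None,
    storage_url: Optional[str] = None,
    callback_url: Optional[str] = None,
) -> dict:
    """Build a request body; storage fields are included only for Cloud delivery."""
    payload = {"query": query, "search_engine": search_engine}
    if storage_type or storage_url:
        # Cloud delivery needs both fields, and a supported storage type.
        if storage_type not in ("s3", "gs") or not storage_url:
            raise ValueError("Cloud delivery needs storage_type (s3 or gs) and storage_url")
        payload["storage_type"] = storage_type
        payload["storage_url"] = storage_url
    if callback_url:
        payload["callback_url"] = callback_url
    return payload


# Cloud delivery: both storage fields set.
cloud = build_async_payload("Sample search phrase", "google_search", "s3", "s3://sample-bucket")
# Push/Pull delivery: storage fields left out (assumed equivalent to blank).
push_pull = build_async_payload("Sample search phrase", "google_search")
```

Validating the pair up front catches a half-configured Cloud request (one storage field without the other) before it is sent.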

Response

Initial Response

When an asynchronous request is initiated, an initial response with a state of “pending” is served to the user, along with a TaskID that can be used to check the task’s progress. The TaskID is also included in the result file name, for easier tracking of the result file in the destination repository.

200 OK

Checking Task Status

Asynchronous tasks can be in one of four potential states:

| State | Description |
| --- | --- |
| success | The task was completed and the results were stored in the destination repository. |
| failed | Nimble was unable to complete the task, and no file was created in the repository. A failed state is also returned for an unidentified or expired TaskID. |
| pending | The task is still being processed. |
| uploading | The results are being uploaded to the destination repository. |

To check the status of an asynchronous task, use the following endpoint:

GET https://api.webit.live/api/v1/tasks/<task_id>

For example:

The response object has the same structure as the Task Completion object that is sent to the callback_url upon task completion.
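Because a task passes through pending and uploading before it reaches success or failed, a client usually polls the status endpoint until it sees one of the two final states. The sketch below shows such a loop. The HTTP call is abstracted behind a `get_state` callable because the exact location of the state field in the response is not shown on this page; the function name, interval, and attempt limit are assumptions.

```python
import time
from typing import Callable

TERMINAL_STATES = {"success", "failed"}


def wait_for_task(
    get_state: Callable[[], str],
    interval_seconds: float = 5.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_state until it returns success or failed, or attempts run out."""
    state = "pending"
    for _ in range(max_attempts):
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        sleep(interval_seconds)  # pending or uploading: wait and retry
    raise TimeoutError(f"task still '{state}' after {max_attempts} checks")


# Usage with a stand-in for the HTTP call. A real get_state would request
# GET /api/v1/tasks/<task_id> and read the state from the response body.
states = iter(["pending", "uploading", "success"])
print(wait_for_task(lambda: next(states), sleep=lambda _: None))
```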

Checking Tasks List

To list your asynchronous tasks and check their status, use the following endpoint:

Path: https://api.webit.live/api/v1/tasks/list

Parameters

| Parameter | Required | Type | Description |
| --- | --- | --- | --- |
| limit | Optional (default = 100) | Number | List item limit. |
| cursor | Optional | String | Cursor for pagination. |

Example Request:

Example Response:

The response objects within data have the same structure as the Task Completion object that is sent to the callback_url upon task completion.

For pagination, keep requesting pages until pagination.hasNext = false or pagination.nextCursor = null.
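The pagination rule above can be sketched as a small generator. The HTTP request is abstracted behind a `fetch_page` callable that receives the current cursor and returns one decoded response; the `data`, `pagination.hasNext`, and `pagination.nextCursor` names come from this page, while the function name and the `id` field in the sample entries are made up for illustration.

```python
from typing import Callable, Iterator, Optional


def iter_tasks(fetch_page: Callable[[Optional[str]], dict]) -> Iterator[dict]:
    """Yield every task by following pagination.nextCursor until it runs out."""
    cursor = None
    while True:
        page = fetch_page(cursor)
        yield from page.get("data", [])
        pagination = page.get("pagination", {})
        cursor = pagination.get("nextCursor")
        if not pagination.get("hasNext") or cursor is None:
            break


# Stand-in for the HTTP call, with made-up task entries. A real fetch_page
# would request /api/v1/tasks/list with the cursor (and limit) parameters.
fake_pages = {
    None: {"data": [{"id": "a"}], "pagination": {"hasNext": True, "nextCursor": "c1"}},
    "c1": {"data": [{"id": "b"}], "pagination": {"hasNext": False, "nextCursor": None}},
}
print([task["id"] for task in iter_tasks(lambda cursor: fake_pages[cursor])])
```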

Task Completion

A POST request will be sent to the callback_url once the task is complete. If you opted for Push/Pull delivery, the output_url field will contain the address from which your data can be downloaded.

Handling Cloud Delivery Failure

If an error is encountered while saving the result data to your cloud storage, the data will be available to download from Nimble’s servers for 24 hours. In that case, the task state will report “success” but will include two additional fields: download_url and error. The download_url field will contain the address from which your data can be downloaded.
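A callback handler or status checker can use these fields to decide where to fetch results from. The sketch below assumes that output_url, download_url, and error appear as top-level keys of the task object, which this page does not confirm; the function name and sample values are hypothetical.

```python
from typing import Optional


def resolve_download_url(task: dict) -> Optional[str]:
    """Return the URL to fetch results from, or None if they are in cloud storage."""
    if task.get("download_url"):
        # Cloud upload failed; results are held on Nimble's servers for 24 hours.
        print(f"cloud delivery failed: {task.get('error')}")
        return task["download_url"]
    # Push/Pull delivery exposes output_url; successful Cloud delivery has neither.
    return task.get("output_url")


# Made-up task objects for illustration.
fallback = {"download_url": "https://example.com/result.json", "error": "access denied"}
push_pull = {"output_url": "https://example.com/output.json"}
print(resolve_download_url(fallback))
print(resolve_download_url(push_pull))
```

Checking download_url first means a failed cloud upload is noticed even though the task state still reports success.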

500 Error

400 Input Error

Response Codes

| Code | Description |
| --- | --- |
| 200 | OK |
| 400 | The requested resource could not be reached |
| 401 | Unauthorized/invalid credential string |
| 500 | Internal Service Error |
| 501 | An error was encountered by the proxy service |
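For logging or error messages, the codes listed above can be kept in a simple lookup. This is only an illustrative sketch; the helper name is hypothetical, and the fallback message for unlisted codes is an assumption.

```python
RESPONSE_CODES = {
    200: "OK",
    400: "The requested resource could not be reached",
    401: "Unauthorized/invalid credential string",
    500: "Internal Service Error",
    501: "An error was encountered by the proxy service",
}


def describe_status(status_code: int) -> str:
    """Map a status code to its description from the list above."""
    return RESPONSE_CODES.get(status_code, f"Unexpected status code {status_code}")


print(describe_status(401))
```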
